Privacy only exists — until it doesn't

For many years now, technology companies have struggled to balance the need to protect the privacy of end users against the need to prevent their own platforms from being misused to transmit illicit material.

This week, Apple, a company that has long worked to cultivate a reputation for respecting the privacy of its customers and users (calling privacy a “fundamental human right”), announced a new cryptographic system designed to detect child abuse images stored on its iCloud platform (more specifically iCloud Photos), without — in theory — introducing new methods of privacy invasion.

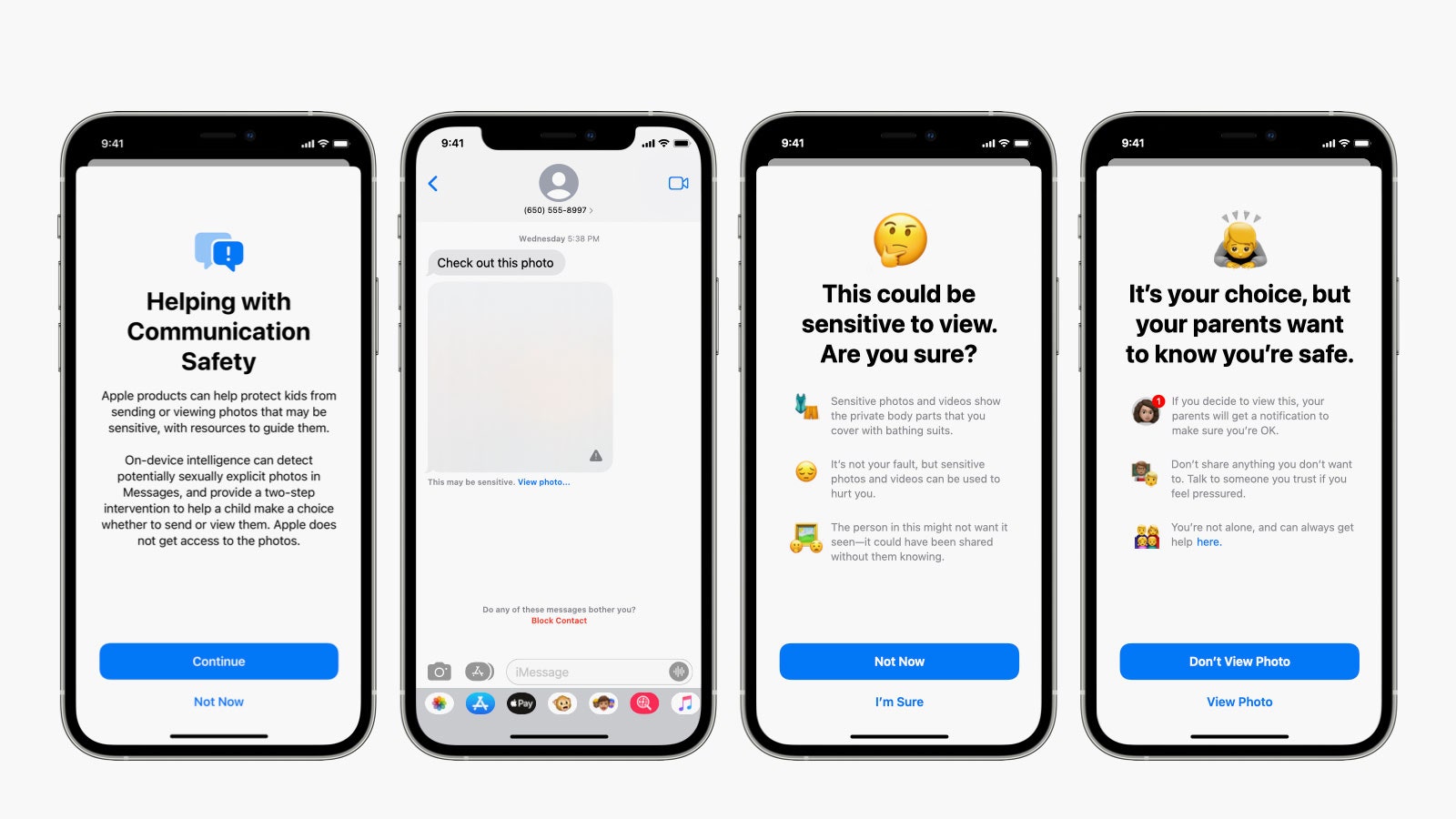

Per Apple’s feature announcement, the company is "introducing new child safety features in three areas, developed in collaboration with child safety experts. First, new communication tools will enable parents to play a more informed role in helping their children navigate communication online. The Messages app will use on-device machine learning to warn about sensitive content, while keeping private communications unreadable by Apple.

"Next, iOS and iPadOS will use new applications of cryptography to help limit the spread of CSAM (child sexual abuse material) online, while designing for user privacy. CSAM detection will help Apple provide valuable information to law enforcement on collections of CSAM in iCloud Photos.

"Finally, updates to Siri and Search provide parents and children expanded information and help if they encounter unsafe situations. Siri and Search will also intervene when users try to search for CSAM-related topics. This program is ambitious, and protecting children is an important responsibility. These efforts will evolve and expand over time."

Apple is not alone in its efforts to curb the spread of illegal material. Most cloud providers — Dropbox, Google, and Microsoft, among others — already scan uploaded files for content that might violate their terms of service or be potentially illegal. But Apple has long resisted scanning users’ files in the cloud by giving users the option to encrypt their data before it ever reaches Apple’s iCloud servers.

Privacy is a fundamental human right. At Apple, it’s also one of our core values. Your devices are important to so many parts of your life. What you share from those experiences, and who you share it with, should be up to you.

We design Apple products to protect your privacy and give you control over your information. It’s not always easy. But that’s the kind of innovation we believe in.

— Apple

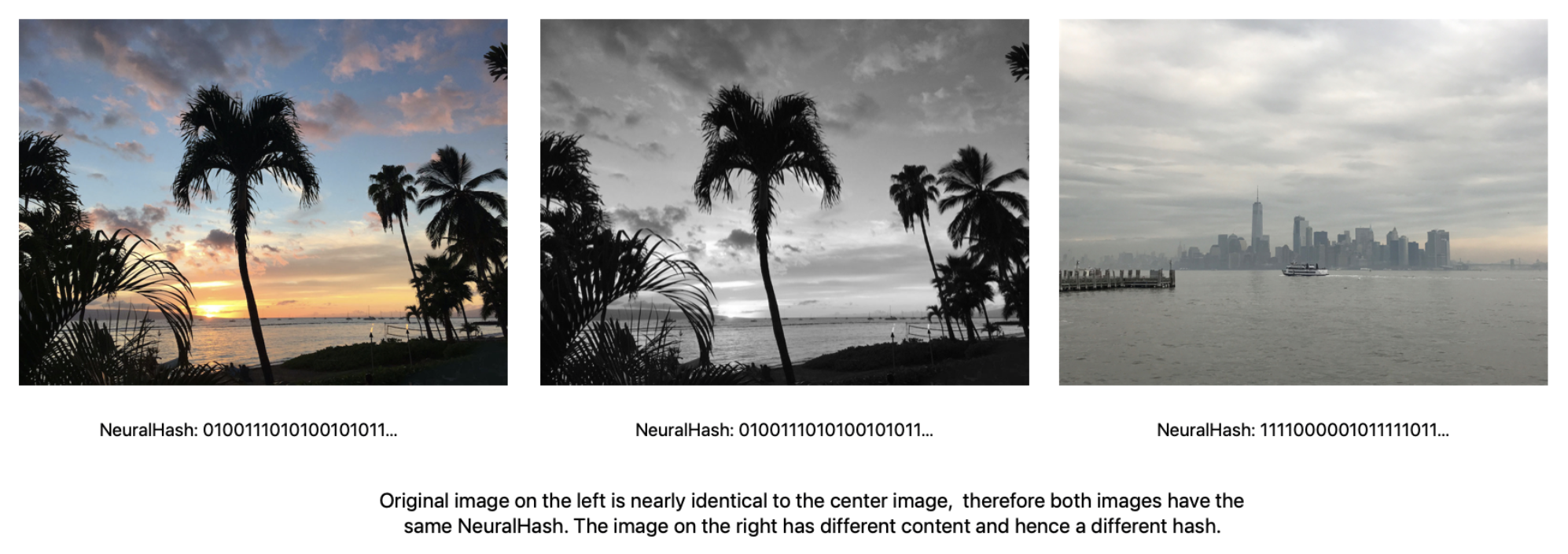

In particular, Apple’s scanning of iCloud Photos has stirred up controversy across the Internet this week. Dubbed 'NeuralHash', Apple’s new system is not a traditional scan of uploaded images. Instead, it relies on a form of image analysis called ‘perceptual hashing’, which can match images despite alterations such as cropping or adjustments to brightness, exposure, and saturation.
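To make the idea of perceptual hashing concrete, here is a minimal sketch using a simple "average hash" on a tiny grayscale grid. This is not NeuralHash, which is based on a neural network; the function names and sample pixel values are illustrative assumptions only.

```python
def average_hash(pixels):
    """Return a bit string: '1' where a pixel is brighter than the image mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    return "".join("1" if p > mean else "0" for p in flat)


def hamming_distance(a, b):
    """Count the positions where two equal-length bit strings differ."""
    return sum(x != y for x, y in zip(a, b))


# Hypothetical 4x4 grayscale images (0 = black, 255 = white).
original = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [220, 230, 30, 40],
    [225, 235, 35, 45],
]
# The same picture with the brightness turned up.
brightened = [[min(255, p + 20) for p in row] for row in original]
# A different picture entirely (a checkerboard).
unrelated = [
    [200, 10, 200, 10],
    [10, 200, 10, 200],
    [200, 10, 200, 10],
    [10, 200, 10, 200],
]

print(hamming_distance(average_hash(original), average_hash(brightened)))
print(hamming_distance(average_hash(original), average_hash(unrelated)))
```

Because each bit only records whether a pixel is above or below the image's own average, a uniform brightness change leaves the hash untouched, while a different image produces a hash that differs in many positions. A cryptographic hash such as SHA-256 would change completely in both cases.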

To prevent evasion by users, the system does not download the raw hashes of known illegal content to the user’s device. Rather, cryptographic techniques such as Private Set Intersection (PSI) and Threshold Secret Sharing (TSS) are used so that Apple learns nothing about images that do not match.

Private Set Intersection allows Apple to learn which image hashes two hash sets have in common (the intersection), but does not reveal any additional information about hashes outside of the intersection.

The system begins by setting up the matching database using the known illicit image hashes provided by the National Center for Missing & Exploited Children (NCMEC) and other child-safety organisations. First, Apple receives the NeuralHashes corresponding to known illicit material from the above child-safety organisations. Next, these NeuralHashes go through a series of transformations that includes a final blinding step, powered by elliptic curve cryptography. The blinding is done using a server-side blinding secret, known only to Apple. The blinded illicit content hashes are placed in a hash table, where the position in the hash table is purely a function of the NeuralHash of the illicit image. This blinded database is securely stored on users’ devices.

Apple states that if a user’s image hash matches an entry in the list of known illicit hashes, then the NeuralHash of that image, put through the same series of transformations used at database setup time, yields exactly the corresponding blinded hash. Based on this property, the server can use the cryptographic header (derived from the NeuralHash) together with its server-side secret to compute the derived encryption key and decrypt the associated payload data.

If the user image doesn’t match, the above step will not lead to the correct derived encryption key, and the server will be unable to decrypt the associated payload data. The server thus learns nothing about non-matching images.
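The blinding and matching steps can be sketched with a toy Diffie-Hellman-style construction. This is only an illustration under loud assumptions: it uses modular exponentiation over a small prime instead of elliptic curves, SHA-256 as a stand-in for every hash and key-derivation step, and compares derived keys instead of decrypting a real payload. All names and constants are hypothetical and are not taken from Apple's implementation.

```python
import hashlib
import secrets

P = 2**127 - 1      # toy prime modulus; NOT a secure group choice
TABLE_SIZE = 64     # toy hash-table size


def hash_to_group(image_hash: bytes) -> int:
    """Map an image hash to a nonzero element mod P (stand-in for a curve point)."""
    digest = int.from_bytes(hashlib.sha256(b"group" + image_hash).digest(), "big")
    return digest % (P - 1) + 1


def table_position(image_hash: bytes) -> int:
    """The slot depends only on the image hash, as the article describes."""
    digest = int.from_bytes(hashlib.sha256(b"pos" + image_hash).digest(), "big")
    return digest % TABLE_SIZE


def derive_key(shared: int) -> bytes:
    return hashlib.sha256(shared.to_bytes(16, "big")).digest()


def build_blinded_table(known_hashes, server_secret):
    # Start with random filler so unused slots are not obviously empty.
    table = [secrets.randbelow(P - 1) + 1 for _ in range(TABLE_SIZE)]
    for h in known_hashes:
        table[table_position(h)] = pow(hash_to_group(h), server_secret, P)
    return table


def client_voucher(image_hash, table):
    """Device side: produce a header and the key that would encrypt the payload."""
    r = secrets.randbelow(P - 2) + 1                      # fresh per-image randomness
    header = pow(hash_to_group(image_hash), r, P)
    key = derive_key(pow(table[table_position(image_hash)], r, P))
    return header, key


def server_key(header, server_secret):
    """Server side: the key it can derive from the header and its secret."""
    return derive_key(pow(header, server_secret, P))


server_secret = secrets.randbelow(P - 2) + 1
table = build_blinded_table([b"known-image-hash"], server_secret)

header, key = client_voucher(b"known-image-hash", table)
match_ok = server_key(header, server_secret) == key      # keys agree on a match

header, key = client_voucher(b"holiday-photo-hash", table)
no_match_ok = server_key(header, server_secret) != key   # keys differ otherwise
print(match_ok, no_match_ok)
```

For a matching image the device computes (H^s)^r and the server computes (H^r)^s, which are the same value, so both sides derive the same key. For any other image the table slot holds an unrelated value, so the server's key is wrong and the payload would stay unreadable.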

Threshold Secret Sharing is a cryptographic technique that enables a secret to be split into distinct shares so that the secret can only be reconstructed from a predefined number of shares (the threshold). For example, if a secret is split into one-thousand shares, and the threshold is ten, the secret can be reconstructed from any ten of the one-thousand shares. However, if only nine shares are available, then nothing is revealed about the secret.
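One well-known way to build a threshold scheme is Shamir's secret sharing, sketched below with small numbers (a threshold of 3 out of 5 shares). The article does not say which construction Apple uses in detail, so treat this as a generic illustration; the prime, function names, and sample secret are assumptions.

```python
import secrets

PRIME = 2**127 - 1  # field modulus; the secret must be smaller than this


def split_secret(secret, threshold, num_shares):
    """Hide the secret as the constant term of a random polynomial of degree threshold-1."""
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(threshold - 1)]
    shares = []
    for x in range(1, num_shares + 1):
        y = 0
        for c in reversed(coeffs):      # Horner's rule: evaluate the polynomial at x
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def reconstruct(shares):
    """Lagrange-interpolate the polynomial at x = 0 to recover the constant term."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        # pow(den, -1, PRIME) is the modular inverse (Python 3.8+).
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


secret = 123456789
shares = split_secret(secret, threshold=3, num_shares=5)

print(reconstruct(shares[:3]) == secret)   # any 3 shares recover the secret
print(reconstruct(shares[2:]) == secret)   # a different set of 3 works too
print(reconstruct(shares[:2]) == secret)   # 2 shares give a wrong value (with overwhelming probability)
```

With fewer shares than the threshold, every candidate secret is equally consistent with the shares in hand, which is why the server cannot learn anything until enough matching images have accumulated.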

Once the number of matching photos reaches the threshold, the photos in question will be sent to human reviewers within Apple, who determine whether the photos are in fact part of the illicit database. If confirmed by the human reviewer, those photos will be sent to the NCMEC, and the user’s account will be disabled.

While the documented design of the system adheres to sound cryptographic principles, privacy critics and security experts argue that by adding any sort of image analysis to user devices, Apple has taken a dangerous step toward the slippery slope of surveillance and watered down its strong stance on user privacy in the face of pressure from law enforcement.

The Electronic Frontier Foundation (EFF) has released a statement arguing that by implementing this system, Apple is opening a backdoor in its highly touted privacy-first operating systems:

"It’s impossible to build a client-side scanning system that can only be used for sexually explicit images sent or received by children. As a consequence, even a well-intentioned effort to build such a system will break key promises of the messenger’s encryption itself and open the door to broader abuses.

"To say that we are disappointed by Apple’s plans is an understatement. Apple has historically been a champion of end-to-end encryption, for all of the same reasons that EFF has articulated time and time again. Apple’s compromise on end-to-end encryption may appease government agencies in the U.S. and abroad, but it is a shocking about-face for users who have relied on the company’s leadership in privacy and security."

Cryptography experts, such as Matt Green from Johns Hopkins University, have also expressed concerns, not with the implementation of the system, but with the potential for such a system to be extended to detect photos on devices that don’t get uploaded to iCloud, thereby invading the privacy of the user’s offline storage:

“Regardless of what Apple’s long term plans are, they’ve sent a very clear signal. In their (very influential) opinion, it is safe to build systems that scan users’ phones for prohibited content. Whether they turn out to be right or wrong on that point hardly matters. This will break the dam — governments will demand it from everyone. And by the time we find out it was a mistake, it will be way too late.”

For now, Apple’s new detection features, which the company says have been in development for several years and weren’t built for governments to monitor citizens, are strictly limited to the United States. NeuralHash will debut in iOS 15 and macOS Monterey, slated to be released in the coming weeks.

People have the right to communicate information privately without backdoors or censorship, including when those people are minors. Apple should make the right decision by its customers — keep these backdoors out of users’ devices.